Introduction to Canal

MySQL Initialization

Initialize the database tables

/*

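Set the binlog format

For a self-hosted MySQL instance, first enable binlog writing and set binlog-format to ROW. Configure my.cnf as follows:

[mysqld]

Note: Alibaba Cloud RDS for MySQL enables binlog by default, and its accounts have the binlog dump privilege by default. No extra privilege or binlog configuration is needed, so you can skip this step.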
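As a quick sanity check on the settings described above, the following Python sketch parses my.cnf-style text and reports which binlog prerequisites are missing. Only `binlog-format` set to ROW is stated in this article; the `log-bin` and `server_id` options in the sample, and the helper name `check_binlog_settings`, are assumptions for illustration. The sketch also assumes the file has no `!include` directives, which `configparser` cannot read.

```python
import configparser

# Hypothetical sample; only binlog-format=ROW is taken from the article.
SAMPLE_MY_CNF = """
[mysqld]
log-bin=mysql-bin
binlog-format=ROW
server_id=1
"""


def check_binlog_settings(cnf_text):
    """Return a list of problems found in the [mysqld] section (empty if none)."""
    parser = configparser.ConfigParser(
        allow_no_value=True, strict=False, interpolation=None
    )
    parser.read_string(cnf_text)
    if not parser.has_section("mysqld"):
        return ["missing [mysqld] section"]

    # MySQL accepts '-' and '_' interchangeably in option names, so normalize.
    options = {
        key.replace("-", "_"): (value or "")
        for key, value in parser.items("mysqld")
    }

    problems = []
    if "log_bin" not in options:
        problems.append("log-bin is not set")
    if options.get("binlog_format", "").upper() != "ROW":
        problems.append("binlog-format is not ROW")
    if not options.get("server_id"):
        problems.append("server_id is not set")
    return problems
```

Calling `check_binlog_settings(open("/etc/my.cnf").read())` (the path is an assumption) returns an empty list when all three settings are present.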

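Create the Canal user

Canal works by presenting itself as a MySQL slave, so the account it uses must hold the privileges that a MySQL slave needs.

CREATE USER canal IDENTIFIED BY 'canal';

For an existing account, you can grant the privileges directly with GRANT.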
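To confirm that an account really has slave-style privileges, one option is to inspect the output of `SHOW GRANTS`. The sketch below assumes the required set is SELECT, REPLICATION SLAVE and REPLICATION CLIENT granted on `*.*`; the article's own GRANT statement is not shown, so treat that set and the helper name `missing_privileges` as assumptions.

```python
import re

# Assumed privilege set for a replication-style client such as Canal.
REQUIRED_PRIVILEGES = {"SELECT", "REPLICATION SLAVE", "REPLICATION CLIENT"}

# Matches global grants only, e.g. "GRANT SELECT, ... ON *.* TO `canal`@`%`".
GLOBAL_GRANT = re.compile(r"GRANT\s+(.+?)\s+ON\s+\*\.\*\s+TO\s", re.IGNORECASE)


def missing_privileges(grant_lines):
    """Return the required privileges not covered by the given SHOW GRANTS lines."""
    granted = set()
    for line in grant_lines:
        match = GLOBAL_GRANT.match(line.strip())
        if not match:
            continue  # database-level grants are ignored in this sketch
        privileges = {p.strip().upper() for p in match.group(1).split(",")}
        if "ALL PRIVILEGES" in privileges:
            return set()
        granted |= privileges
    return REQUIRED_PRIVILEGES - granted
```

Feeding it the rows returned by `SHOW GRANTS FOR 'canal'@'%'` gives an empty set when nothing is missing.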

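Linux Deployment

Configure Canal

Installation

Download the required version of Canal from the project's download page.

Extract the downloaded archive:

mkdir -p /tmp/canal
tar -xzf canal.deployer-1.1.5-SNAPSHOT.tar.gz -C /tmp/canal

Modify the configuration

Application parameters

vi conf/example/instance.properties

#################################################

Notes

canal.instance.connectionCharset is the database character encoding, expressed as the corresponding Java charset name, for example UTF-8, GBK, or ISO-8859-1.

If the system has only one CPU, set canal.instance.parser.parallel to false.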
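The single-CPU rule above is easy to automate. The following sketch reads properties-style text, then chooses a value for `canal.instance.parser.parallel` from the CPU count. The sample excerpt and the helper names `parse_properties` and `parallel_setting` are hypothetical; only the two property names come from this article.

```python
import os

# Hypothetical excerpt; real files contain many more keys.
SAMPLE_PROPERTIES = """
# instance settings
canal.instance.connectionCharset = UTF-8
canal.instance.parser.parallel = true
"""


def parse_properties(text):
    """Parse simple key=value lines, skipping blanks and '#' comments."""
    props = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def parallel_setting(cpu_count):
    """Return 'false' on a single-CPU host, 'true' otherwise."""
    # os.cpu_count() may return None; treat that as a single CPU to be safe.
    return "false" if (cpu_count or 1) <= 1 else "true"


props = parse_properties(SAMPLE_PROPERTIES)
props["canal.instance.parser.parallel"] = parallel_setting(os.cpu_count())
```

The parser ignores line continuations and escapes, which is enough for flat files like this one but not for every properties file.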

Start

sh bin/startup.sh

View the logs

vi logs/canal/canal.log

2013-02-05 22:45:27.967 [main] INFO com.alibaba.otter.canal.deployer.CanalLauncher - ## start the canal server.

Logs for a specific instance:

vi logs/example/example.log

2013-02-05 22:50:45.636 [main] INFO c.a.o.c.i.spring.support.PropertyPlaceholderConfigurer - Loading properties file from class path resource [canal.properties]
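Instead of reading the logs by eye, a small script can look for the start marker and collect error lines. This is a minimal sketch: the marker text is copied from the log line shown above, while the helper name `summarize_log` and the assumption that error entries contain the word ERROR surrounded by spaces are mine.

```python
START_MARKER = "## start the canal server."


def summarize_log(lines):
    """Return (started, errors) for an iterable of log lines."""
    lines = list(lines)  # allow file objects, which can only be iterated once
    started = any(START_MARKER in line for line in lines)
    errors = [line.rstrip("\n") for line in lines if " ERROR " in line]
    return started, errors
```

For example, `summarize_log(open("logs/canal/canal.log"))` returns whether the start marker was seen and any error lines found.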

Stop

sh bin/stop.sh

Docker Single-Node Deployment

This deployment uses Canal + MySQL + RabbitMQ.

Docker deployment overview

The following table lists the services deployed with Docker:

| Container service | IP | Exposed ports |
|---|---|---|
| Canal | 172.18.0.20 | 11111 |
| MySQL | 172.18.0.10 | 3306 |
| RabbitMQ | 172.18.0.30 | 5672, 15672 |

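Preparation

MySQL configuration file

Prepare the my.cnf file that will be mounted into the MySQL container:

[mysqld]

Canal configuration

Configure canal.properties

Prepare the canal.properties file that will be mounted into the Canal container:

.....

The complete configuration file is as follows:

#################################################

Configure instance.properties

Prepare the instance.properties file, which holds Canal's data source settings, for mounting. Its path is example/instance.properties.

#################################################

Create the Canal log directory

Create a directory to mount as Canal's log directory:

mkdir -p /tmp/data/canal/logs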

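Write the Canal consumer

Add the POM dependency

<dependency>

Write the code

public class CanalConsumer {

Docker orchestration

Write the docker-compose configuration file

version: '2'

Start Docker

docker-compose up -d

Check the environment

Check MySQL

Connect to MySQL with a remote client to confirm that it accepts connections.

MySQL initialization

See the [MySQL Initialization] section above.

Check RabbitMQ

Log in to the RabbitMQ management UI to confirm that login works.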
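Before opening a client or browser, a plain TCP probe can show whether each service port is reachable. The sketch below assumes the ports are published on the Docker host and uses MySQL's 3306, Canal's 11111, and RabbitMQ's default AMQP and management ports (5672 and 15672). The names `SERVICE_PORTS`, `is_port_open` and `check_services` are illustrative. A successful probe only shows that the port accepts connections, not that login works.

```python
import socket

# Assumed published ports; 15672 serves the RabbitMQ management UI.
SERVICE_PORTS = {
    "MySQL": 3306,
    "RabbitMQ (AMQP)": 5672,
    "RabbitMQ (management)": 15672,
    "Canal": 11111,
}


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_services(host):
    """Probe every known service port on the given host."""
    return {name: is_port_open(host, port) for name, port in SERVICE_PORTS.items()}
```

Calling `check_services("127.0.0.1")` on the Docker host returns a mapping from service name to `True` or `False`.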

检查Canal服务

因为是启动后才初始化的 mysql用户,Canal启动连接数据库会失败,因为配置了restart: always,docker容器会不断重启重试。

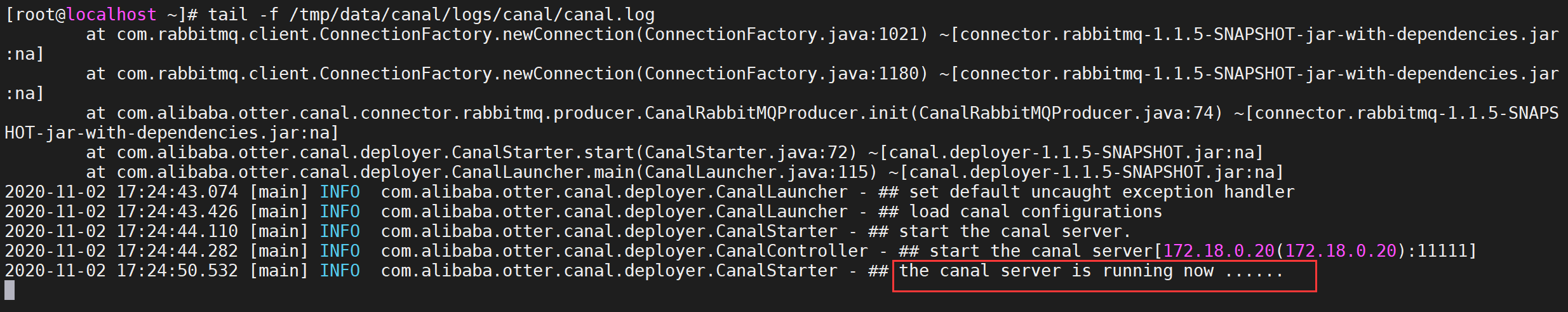

1 | tail -f /tmp/data/canal/logs/canal/canal.log |

出现如下日志表示Canal 启动成功

Test the services

Test with the consumer running

Modify a row and check that the change is picked up:

Waiting for message.....

The test passes.
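To make "the change is picked up" concrete, the sketch below decodes one message body and lists which columns changed. It assumes Canal is publishing flat JSON messages (`canal.mq.flatMessage = true`), which this article does not show, and that UPDATE messages carry the new rows in `data` and the previous values of changed columns in `old`. The sample payload and the helper name `changed_columns` are made up for illustration.

```python
import json

# Hypothetical flat-message payload for a single-row UPDATE.
SAMPLE_MESSAGE = json.dumps({
    "database": "test",
    "table": "user",
    "type": "UPDATE",
    "isDdl": False,
    "data": [{"id": "1", "name": "bob"}],
    "old": [{"name": "alice"}],
})


def changed_columns(message_json):
    """Return, per updated row, a dict of column -> (old_value, new_value)."""
    message = json.loads(message_json)
    if message.get("isDdl") or message.get("type") != "UPDATE":
        return []
    changes = []
    new_rows = message.get("data") or []
    old_rows = message.get("old") or []
    for new_row, old_row in zip(new_rows, old_rows):
        changes.append(
            {column: (old_value, new_row.get(column))
             for column, old_value in old_row.items()}
        )
    return changes
```

If the consumer receives a different serialization, for example Canal's protobuf format, this decoder does not apply.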

关闭消费者测试

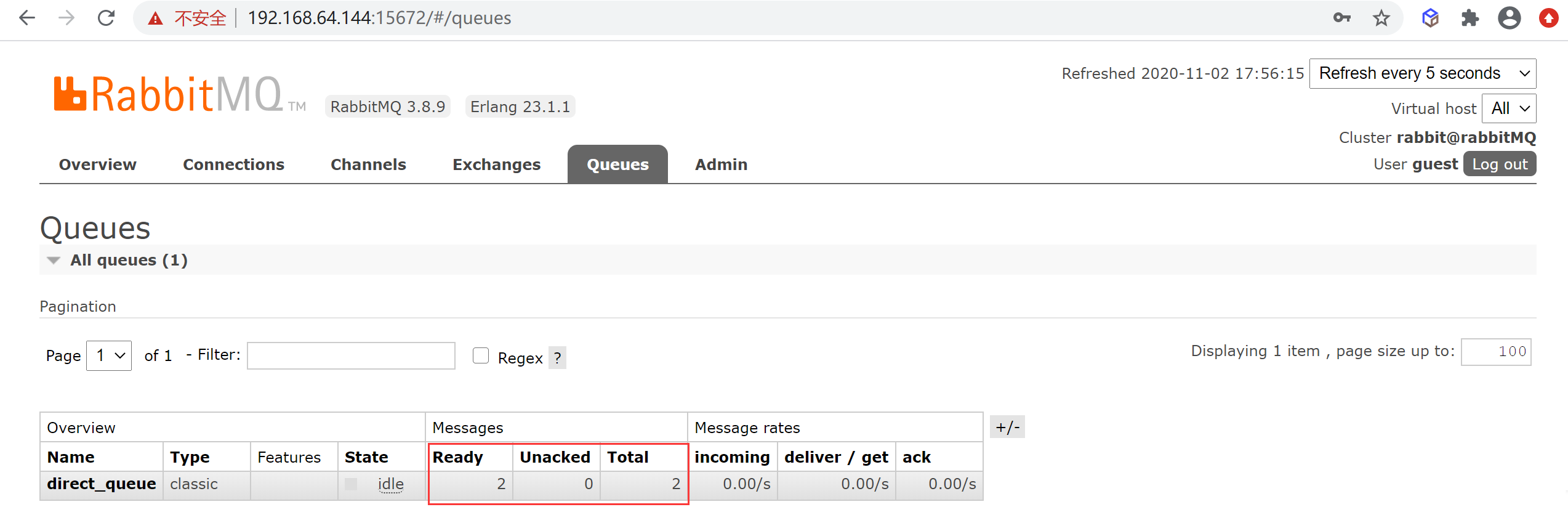

关闭消费者,修改数据后,检查修改后数据是否推送到了MQ

发现有数据堆积,启动消费者后就可以消费

Docker Cluster Deployment

Docker deployment overview

The following table lists the services deployed with Docker:

| Container service | IP | Exposed ports |
|---|---|---|
| Canal01 | 172.18.0.20 | 11111 |
| Canal02 | 172.18.0.21 | 11112 |
| ZooKeeper | 172.18.0.5 | 2181 |
| MySQL | 172.18.0.10 | 3306 |
| RabbitMQ | 172.18.0.30 | 5672, 15672 |

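Preparation

Install ZooKeeper

Both Canal and Kafka clusters rely on ZooKeeper for service coordination. For easier management, ZooKeeper is usually deployed separately, either as a standalone service or as a ZooKeeper cluster.

Modify the configuration files

See [Canal configuration] in the Docker single-node section.

Changes to canal.properties

A Canal cluster requires the following changes to the Canal configuration:

## Canal ZooKeeper configuration; separate cluster addresses with commas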
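The comma-separated address format can be validated before the containers start. The sketch below splits such a value into host and port pairs. It assumes the value belongs to the `canal.zkServers` property from Canal's stock canal.properties, which is not shown in this article, and that entries without a port use ZooKeeper's default 2181. The helper name `parse_zk_servers` is hypothetical.

```python
def parse_zk_servers(value, default_port=2181):
    """Split 'host1:port1,host2:port2' into a list of (host, port) tuples."""
    servers = []
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue  # tolerate trailing commas
        host, separator, port = entry.rpartition(":")
        if not separator:
            # No explicit port: the whole entry is the host name.
            host, port = entry, default_port
        servers.append((host, int(port)))
    return servers
```

For the single ZooKeeper container in the table above, `parse_zk_servers("172.18.0.5:2181")` yields one pair; a malformed port raises `ValueError`.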

编写Canal消费者

参考上面配置

Docker 任务编排

编写docker-compose配置文件

1 | version: '2' |

启动Docker

1 | docker-compose up -d |

检查环境

参考上文检查环境

测试服务

启动消费者测试

修改一条数据检查能够监听到

1 | 等待 message..... |

可以测试通过

关闭消费者测试

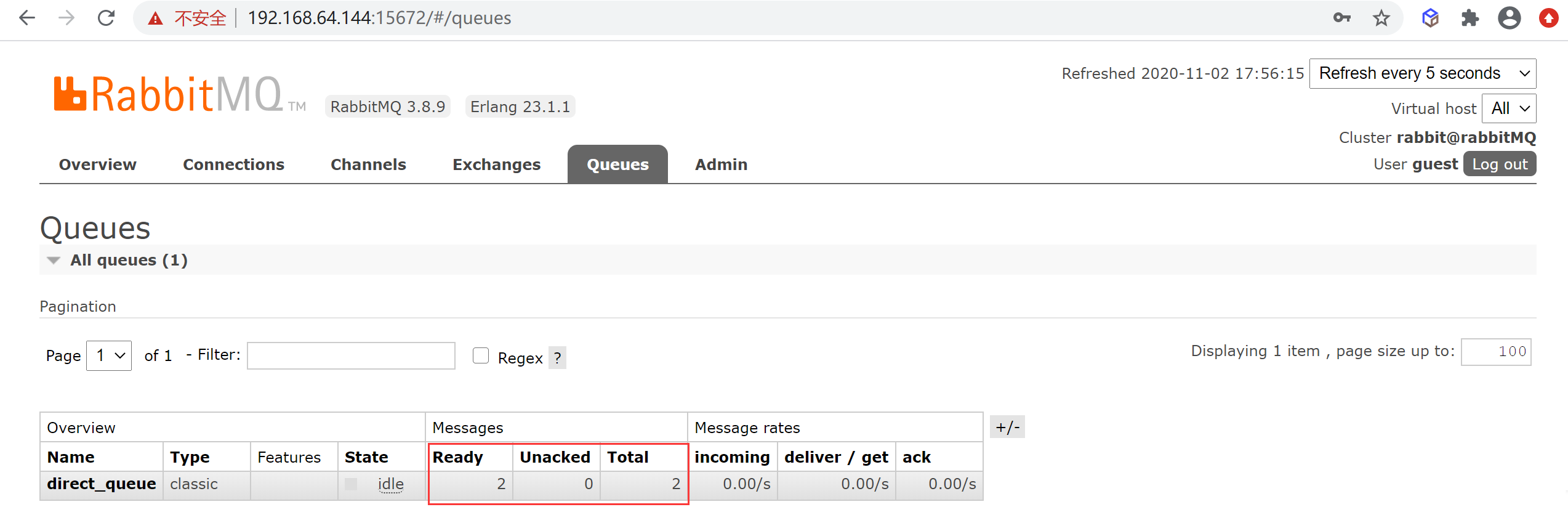

关闭消费者,修改数据后,检查修改后数据是否推送到了MQ

发现有数据堆积,启动消费者后就可以消费

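Failure testing

Stop Canal01

Stop Canal01 to test failover:

docker-compose stop canal01

Check whether data changes are still picked up.

Stop Canal02

Stop the second Canal instance:

docker-compose stop canal02

Data changes can no longer be synchronized.

Start Canal01

Start the first Canal instance:

docker-compose start canal01

Data changes are synchronized normally again.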

Start Canal02

docker-compose start canal02

Data changes are still synchronized normally.
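The stop and start sequence above can be summarized with a toy active/standby model. This is not how Canal implements failover (the real cluster coordinates through ZooKeeper); it is a minimal sketch of the observed behavior, and the class name `CanalClusterModel` is hypothetical.

```python
class CanalClusterModel:
    """Toy model: one running node is active, the others stand by."""

    def __init__(self, nodes):
        self.running = {node: True for node in nodes}
        self.active = None
        self._elect()

    def _elect(self):
        # Keep the current active node while it is running; otherwise promote
        # the first running node, or nobody if every node is stopped.
        if self.active is not None and self.running[self.active]:
            return
        self.active = next(
            (node for node, up in self.running.items() if up), None
        )

    def stop(self, node):
        self.running[node] = False
        self._elect()

    def start(self, node):
        self.running[node] = True
        self._elect()

    def can_sync(self):
        """Data changes are synchronized only while some node is active."""
        return self.active is not None
```

Replaying the four steps against this model gives the same results as the tests: synchronization continues with one node stopped, halts with both stopped, and resumes as soon as either node is started.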